The Camera Offset Space: Real-time Potentially Visible Set Computations for Streaming Rendering

ACM Transactions on Graphics (SIGGRAPH Asia 2019)

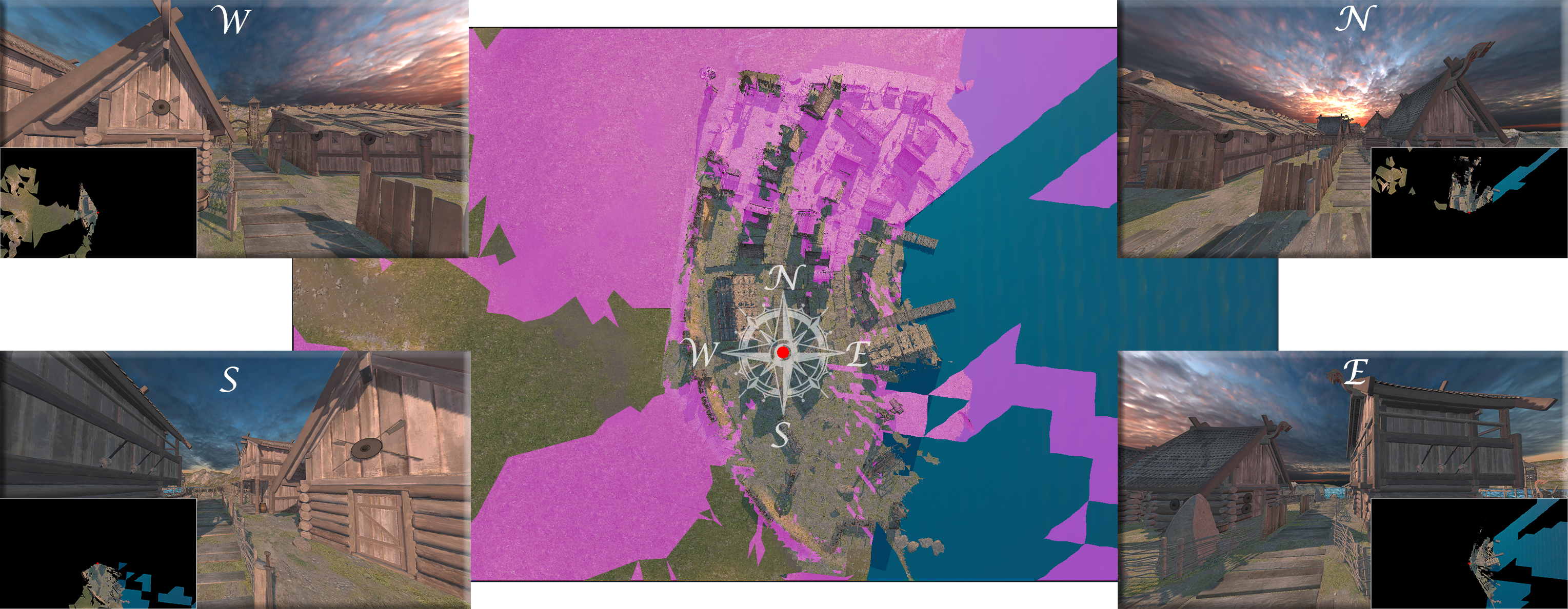

A bird's-eye view of a full 360-degree potentially visible set (PVS) computed for a region around the camera from four reference views (shown in the corners) in an outdoor scene. Geometry labeled as invisible is shown in magenta, and the red dot marks the camera position in the scene. To construct the PVS, we collect all triangles that can cover a fragment in a per-fragment list and resolve their visibility in the proposed camera offset space. Each reference view contains a corner inset showing its corresponding PVS, starting from the top right and going clockwise: North, East, South, and West. The final PVS is the union of these four sets. Novel views rendered with the PVS from viewpoints around the reference location are complete and hole-free.
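The assembly described in the caption (per-fragment triangle lists, a visibility resolve per list, and a union over the four reference views) can be sketched in Python as below. This is a minimal sketch under stated assumptions, not the authors' GPU implementation: the `FragmentEntry` record, its `covers_all` flag and depth bounds, and the resolve rule are hypothetical stand-ins for the COS-based resolution. The rule is chosen only because it is conservative: a triangle is dropped at a sample only if some other triangle covers that sample for every camera offset in the view cell and lies entirely in front of it. The sketch also ignores locations between pixel centers, which the paper handles.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set, Tuple


@dataclass(frozen=True)
class FragmentEntry:
    """One triangle in a per-fragment list (hypothetical record layout)."""
    tri_id: int
    covers_all: bool  # covers this sample for every camera offset in the view cell
    z_min: float      # nearest depth of the triangle at this sample over the view cell
    z_max: float      # farthest depth of the triangle at this sample over the view cell


FragmentLists = Dict[Tuple[int, int], List[FragmentEntry]]  # pixel -> triangle list


def resolve_fragment(entries: List[FragmentEntry]) -> Set[int]:
    """Keep every triangle that might be visible at this sample (conservative)."""
    # Farthest depth of the best occluder that covers the sample under all offsets.
    occluder_far = min((e.z_max for e in entries if e.covers_all), default=float("inf"))
    # Only triangles whose nearest depth lies strictly behind that occluder are culled.
    return {e.tri_id for e in entries if e.z_min <= occluder_far}


def view_pvs(fragment_lists: FragmentLists) -> Set[int]:
    """PVS of one reference view: union of the surviving triangles over all fragments."""
    pvs: Set[int] = set()
    for entries in fragment_lists.values():
        pvs |= resolve_fragment(entries)
    return pvs


def full_pvs(views: Iterable[FragmentLists]) -> Set[int]:
    """360-degree PVS: union of the per-view sets (e.g. North, East, South, West)."""
    result: Set[int] = set()
    for fragment_lists in views:
        result |= view_pvs(fragment_lists)
    return result


# Usage: triangle 1 is hidden at pixel (0, 0) but can still be seen at pixel (1, 0).
north = {
    (0, 0): [FragmentEntry(0, True, 1.0, 1.2), FragmentEntry(1, False, 2.0, 2.5)],
    (1, 0): [FragmentEntry(1, False, 2.0, 2.5)],
}
east = {(0, 0): [FragmentEntry(2, True, 3.0, 3.0)]}
print(resolve_fragment(north[(0, 0)]))  # {0}
print(full_pvs([north, east]))          # {0, 1, 2}
```

A triangle culled at one fragment can still enter the PVS through another fragment, which is why each per-view set is a union over all fragment lists rather than an intersection.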

Abstract

Potential visibility has historically been important whenever rendering performance was insufficient. With the rise of virtual reality, rendering power may once again be insufficient, e.g., for the integrated graphics of head-mounted displays. To tackle the issue of efficient potential visibility computations on modern graphics hardware, we introduce the camera offset space (COS). In contrast to traditional visibility computations, which determine which pixels are covered by an object under all potential viewpoints, the COS describes under which camera movement a sample location is covered by a triangle. In this way, the COS opens up a new set of possibilities for visibility computations. By evaluating the pairwise relations of triangles in the COS, we show how to efficiently determine occluded triangles. Constructing the COS for all pixels of a rendered view leads to a complete potentially visible set (PVS) for complex scenes. By fusing triangles into larger occluders, including locations between pixel centers, and considering camera rotations, we describe an exact PVS algorithm that includes all viewing directions inside a view cell. The COS implementation combines real-time rendering and compute steps. We provide the first GPU PVS implementation that works on-the-fly on unconnected triangles, without preprocessing. This opens the door to a new approach to rendering for virtual reality head-mounted displays and for server-client settings that stream 3D applications such as video games.
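To make the idea of mapping a triangle into the COS concrete, here is a small Python sketch under simplifying assumptions of our own: a pinhole camera at the origin looking down +z with unit focal length, camera offsets restricted to translations in the x-y plane, and a sample location given as normalized image coordinates `s`. For a fixed `s`, the offset under which a 3D point `p` lands on the sample is `c = (p_x - s_x * p_z, p_y - s_y * p_z)`. This map is linear, so each triangle becomes a 2D triangle in offset space, and its barycentric coordinates there also interpolate depth. The pairwise check `hidden_on_grid` (a hypothetical helper) only tests a regular grid of offsets inside a square view cell; it is a sampled approximation for illustration, not the exact pairwise COS test, occluder fusion, or rotation handling described in the paper.

```python
from typing import Optional, Sequence, Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]


def to_cos(p: Vec3, s: Vec2) -> Vec2:
    """Camera offset (x-y translation) under which point p projects onto sample s.

    Assumption: pinhole camera at the origin, looking down +z, focal length 1.
    """
    x, y, z = p
    return (x - s[0] * z, y - s[1] * z)


def covered_depth(tri: Sequence[Vec3], s: Vec2, c: Vec2) -> Optional[float]:
    """Depth at which the ray through sample s, from a camera shifted by c, hits tri.

    Returns None if the triangle does not cover the sample under offset c.
    """
    a, b, d = (to_cos(v, s) for v in tri)
    det = (b[0] - a[0]) * (d[1] - a[1]) - (d[0] - a[0]) * (b[1] - a[1])
    if det == 0.0:
        return None  # degenerate in the COS: triangle seen edge-on along the sample ray
    # Barycentric coordinates of c with respect to the COS triangle (a, b, d).
    l1 = ((c[0] - a[0]) * (d[1] - a[1]) - (d[0] - a[0]) * (c[1] - a[1])) / det
    l2 = ((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])) / det
    l0 = 1.0 - l1 - l2
    if min(l0, l1, l2) < 0.0:
        return None
    # p -> (to_cos(p, s), p_z) is linear, so the same weights interpolate depth.
    return l0 * tri[0][2] + l1 * tri[1][2] + l2 * tri[2][2]


def hidden_on_grid(occluder: Sequence[Vec3], target: Sequence[Vec3], s: Vec2,
                   radius: float, steps: int = 9) -> bool:
    """True if, at every sampled offset where target covers s, occluder is strictly in front.

    Offsets are sampled on a steps x steps grid over the square [-radius, radius]^2.
    """
    for i in range(steps):
        for j in range(steps):
            c = (-radius + 2 * radius * i / (steps - 1),
                 -radius + 2 * radius * j / (steps - 1))
            z_target = covered_depth(target, s, c)
            if z_target is None:
                continue  # target does not cover s under this offset
            z_occ = covered_depth(occluder, s, c)
            if z_occ is None or z_occ >= z_target:
                return False
    return True


# Usage: a large triangle at depth 1 in front of a small one at depth 5.
occluder = [(-10.0, -10.0, 1.0), (10.0, -10.0, 1.0), (0.0, 10.0, 1.0)]
target = [(-1.0, -1.0, 5.0), (1.0, -1.0, 5.0), (0.0, 1.0, 5.0)]
print(hidden_on_grid(occluder, target, (0.0, 0.0), radius=0.5))  # True
print(hidden_on_grid(target, occluder, (0.0, 0.0), radius=0.5))  # False
```

Working per sample in offset space turns "is this triangle hidden from every position in the view cell?" into a 2D containment and depth-ordering question between triangles, which is the kind of pairwise relation the abstract refers to; an exact method would reason about the COS triangles as regions instead of sampling them.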

Materials

- Paper (Full Author's Copy) (25 MB)

- Supplemental material (16 MB)


Citation

Jozef Hladky, Hans-Peter Seidel, Markus Steinberger

The Camera Offset Space: Real-time Potentially Visible Set Computations for Streaming Rendering

To appear in: ACM Transactions on Graphics (Proc. SIGGRAPH Asia 2019)

@article{Hladky2019_COS,
  author = {Jozef Hladky and Hans-Peter Seidel and Markus Steinberger},
  title = {The Camera Offset Space: Real-time Potentially Visible Set Computations for Streaming Rendering},
  journal = {ACM Transactions on Graphics (Proc. SIGGRAPH Asia 2019)},
  year = {2019},
  volume = {38},
  number = {6},
  articleno = {231},
  doi = {10.1145/3355089.3356530}
}